Release notes for the Genode OS Framework 11.11

Each Genode release is themed with a predominating topic. Version 11.08 aimed at blurring the lines between the use of different base platforms whereas its predecessor re-addressed inter-process communication. With the current release, we explore the various approaches to virtualization using Genode. At first sight, this topic sounds like beating a dead horse because virtualization is widely regarded as a commodity by now. However, because Genode has virtualization built right into the heart of its architecture, this topic gets a quite different spin. As described in Section A Plethora of Levels of Virtualization, version 11.11 contains the results of our exploration work on faithful virtualization, paravirtualization, OS-level virtualization, and application-level virtualization. The latter category is unique to Genode's architecture.

Besides elaborating on virtualization, version 11.11 comes with new features such as support for user-level debugging by means of the GNU debugger and the ability to run complex interactive UNIX applications (e.g., VIM) on Genode. Furthermore, we improved device-driver support, specifically for ARM platforms. Apart from working on features, we have been busy optimizing several aspects of the framework, ranging from improved build-system performance, through a reduced kernel-memory footprint for NOVA, to a new IPC implementation for Linux.

For regular Genode developers, one of the most significant changes is the new tool chain based on GCC 4.6.1. The background story about the tool-chain update and its implications is described in Section [New tool chain based on GCC 4.6.1].

A Plethora of Levels of Virtualization

Virtualization has become a commodity feature universally expected from modern operating systems. The motivations behind employing virtualization techniques roughly fall into two categories: the re-use of existing software and sandboxing untrusted code. The latter category is particularly related to the Genode architecture. The Genode process tree is essentially a tree of nested sandboxes where each node is able to impose policy on its children. This immediately raises the question of where Genode's design could take us when combined with existing virtualization techniques. To explore the possible inter-relationships between Genode and virtualization, we conducted a series of experiments. We found that Genode's recursive architecture paves the way to far more flexible techniques than those we know from existing solutions.

Faithful x86 PC Virtualization enabled by the Vancouver VMM

Most commonly, the term virtualization refers to faithful virtualization where an unmodified guest OS is executed on a virtual hardware platform. Examples of such virtual machines are VMware, VirtualBox, KVM, and Xen - naming only those that make use of hardware virtualization capabilities. Those established technologies have an impressive track record in the first of the two categories mentioned above - software re-use. However, when it comes to sandboxing properties, we find that another virtual machine monitor (VMM) implementation outshines these established solutions, namely the Vancouver virtual machine monitor executed on top of the NOVA hypervisor. Combined, NOVA and Vancouver are able to slash the complexity of the trusted computing base (TCB) for isolating virtual machines to a tiny fraction of that of the established products. The key is the poly-instantiation of the relatively complex virtual machine monitor: each virtual machine gets its own VMM instance, and each VMM is executed within a dedicated protection domain. So a problem in one VMM can only affect its respective guest OS but not any other VM. Because the VMM is executed outside of the hypervisor, the hypervisor's complexity is dramatically lower than that of traditional hypervisors. Hence, the authors of NOVA coined the term microhypervisor.

The NOVA virtualization architecture is detailed in the paper NOVA: A Microhypervisor-Based Secure Virtualization Architecture by Udo Steinberg and Bernhard Kauer.

NOVA has been one of the supported base platforms of Genode since February 2010. Until now, however, Vancouver has been tied to a specialized user land that comes with NOVA. For the current Genode release, we took the chance to adopt this technology for Genode.

|

|

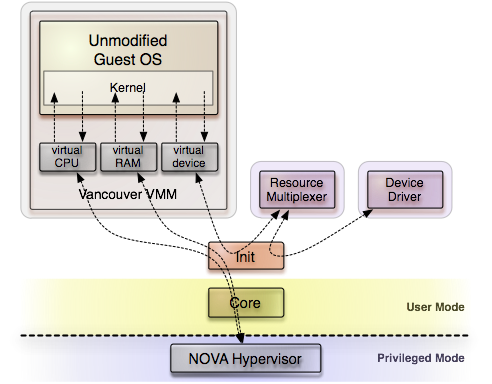

The Vancouver virtual machine monitor executed as Genode component

|

On Genode, the Vancouver VMM is executed as a normal Genode process. Consequently, any number of VMM instances can be started either statically via the init process or dynamically. By bringing Vancouver to Genode we are able to combine the high performance and secure design of Vancouver with the flexibility of Genode's component architecture. By combining Genode's session routing concept with resource multiplexers, virtual machines can be connected to one another as well as to Genode components.

That said, the current stage of development is still highly experimental. But it clearly shows the feasibility of performing faithful virtualization naturally integrated with a Genode-based system. For more technical details about the porting work, please refer to Section Vancouver virtual machine monitor.

Android paravirtualized

Since 2009, Genode has embraced the concept of paravirtualization for executing unmodified Linux applications by means of the OKLinux kernel. This special variant of the Linux kernel is modified to run on top of the OKL4 microkernel. With the added support for the Fiasco.OC kernel as base platform, the use of L4Linux as another paravirtualized Linux variant became feasible. L4Linux has a long history reaching back to times long before the term paravirtualization was coined. In contrast to faithful virtualization, paravirtualization requires modifications of the kernel of the guest OS but preserves binary compatibility of the guest's user-level software. The proponents of this approach cite two advantages over faithful virtualization: better performance and independence from virtualization-hardware support. For example, L4Linux is available on ARM platforms that lack virtualization support.

L4Linux is the base of L4Android, a project that combines the Android software stack with L4Linux. With the current release, we have integrated L4Android with Genode.

- L4Android project website: https://l4android.org

|

|

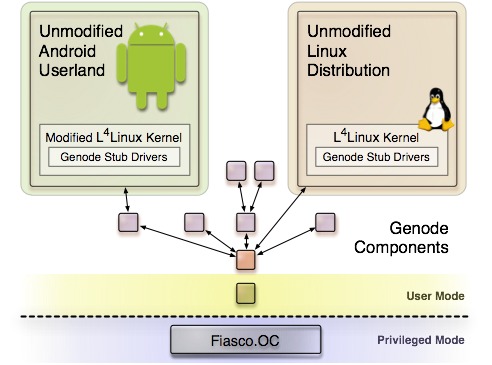

Android, a Linux distribution, and a process tree of Genode components running side by side

|

As illustrated in the figure above, multiple Linux instances of both types, Android and plain Linux, can be executed as nodes of the Genode process tree. For their integration with the Genode environment, we extended the L4Linux kernel with custom stub drivers that make Genode's session interfaces available as virtual devices. These virtual devices include NIC, UART, framebuffer, block, keyboard, and pointer devices. Our work on L4Linux is explained in more detail in Section L4Linux / L4Android.

OS-level Virtualization using the Noux runtime environment

Noux is our take on OS-level virtualization. The goal is to be able to use the wealth of command-line-based UNIX software (in particular GNU) on Genode without the overhead of running and maintaining a complete guest OS, and without changing the original source code of the UNIX programs. This work is primarily motivated by our ongoing mission to use Genode as development environment.

|

|

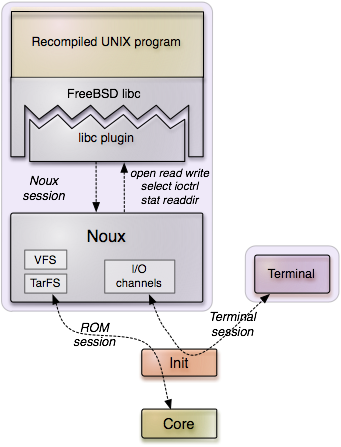

The Noux runtime environment for UNIX software. The program is linked against a custom libc plugin that directs system calls over an RPC interface to the Noux server. The RPC interface resembles a traditional UNIX system-call API.

|

The Noux approach is to provide the traditional UNIX system-call interface as an RPC service, which is at the same time the parent of the UNIX process(es) to execute. The UNIX process is linked against the libc with a special back end (libc plugin) that maps libc functionality to Noux RPC calls. This way, the integration of the UNIX program with Noux is completely transparent to the program. Because Noux plays the role of a UNIX kernel, it has to implement typical UNIX functionality such as a virtual file system (VFS). But in contrast to a real kernel, it does not comprise any device drivers or protocol stacks. As file system, we currently use TAR archives that Noux obtains from core's ROM service. The content of such a TAR archive is exposed to the UNIX program as a file system.
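To make the idea of a TAR archive serving as a read-only file system more tangible, here is a minimal sketch of looking up a file in an in-memory archive. It is not taken from the Noux sources. It only relies on the standard ustar header layout (512-byte blocks, file name at offset 0, octal size at offset 124). The names `lookup`, `File_content`, and `append_file` are hypothetical, and the helper writes a simplified header without magic string or checksum.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <cstring>
#include <string>
#include <vector>

// Field layout of a POSIX ustar header block
constexpr std::size_t BLOCK_SIZE  = 512;
constexpr std::size_t NAME_OFFSET = 0,   NAME_LEN = 100;
constexpr std::size_t SIZE_OFFSET = 124, SIZE_LEN = 12;

// Result of a lookup (hypothetical type); 'data' points into the archive
struct File_content
{
    const unsigned char *data;
    std::size_t          size;
    bool                 found;
};

// Parse the octal ASCII number stored in a header field
static std::size_t parse_octal(const unsigned char *field, std::size_t len)
{
    std::size_t i = 0, value = 0;
    while (i < len && field[i] == ' ') ++i;             // skip leading padding
    for (; i < len && field[i] >= '0' && field[i] <= '7'; ++i)
        value = value * 8 + (field[i] - '0');
    return value;
}

static std::size_t round_up_to_block(std::size_t n)
{
    return (n + BLOCK_SIZE - 1) / BLOCK_SIZE * BLOCK_SIZE;
}

// Walk the archive header by header and return the content of 'path'
File_content lookup(const std::vector<unsigned char> &archive,
                    const std::string &path)
{
    std::size_t offset = 0;
    while (offset + BLOCK_SIZE <= archive.size()) {
        const unsigned char *header = archive.data() + offset;
        if (header[NAME_OFFSET] == 0) break;            // zero block ends the archive

        // the name is NUL-terminated unless it fills the whole field
        std::size_t name_len = 0;
        while (name_len < NAME_LEN && header[NAME_OFFSET + name_len]) ++name_len;
        std::string name(reinterpret_cast<const char *>(header) + NAME_OFFSET, name_len);

        std::size_t size        = parse_octal(header + SIZE_OFFSET, SIZE_LEN);
        std::size_t data_offset = offset + BLOCK_SIZE;
        if (size > archive.size() - data_offset) break; // truncated archive

        if (name == path)
            return { archive.data() + data_offset, size, true };

        offset = data_offset + round_up_to_block(size); // data is padded to full blocks
    }
    return { nullptr, 0, false };
}

// Helper for experiments: append a simplified header (name and size only)
// followed by the file data, padded to a full block
void append_file(std::vector<unsigned char> &archive,
                 const std::string &name, const std::string &content)
{
    assert(name.size() < NAME_LEN);
    std::vector<unsigned char> header(BLOCK_SIZE, 0);
    std::memcpy(header.data() + NAME_OFFSET, name.data(), name.size());
    std::snprintf(reinterpret_cast<char *>(header.data()) + SIZE_OFFSET,
                  SIZE_LEN, "%011zo", content.size());
    archive.insert(archive.end(), header.begin(), header.end());
    archive.insert(archive.end(), content.begin(), content.end());
    archive.resize(round_up_to_block(archive.size()), 0);
}
```

Because the lookup returns a pointer into the archive buffer rather than a copy, file content can be served without any additional allocation, which suits an archive that is handed out as read-only memory.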

One of the most prevalent pieces of UNIX software, and the one we spend most of the day with, is VIM. Hence, we set the goal for this release to execute VIM via Noux on Genode. This particular program is interesting for several technical reasons as well. First, in contrast to most command-line tools such as coreutils, it is interactive and implements its event loop via the select system call. This provided us with the incentive to implement select in Noux. Second, with far more than 100,000 lines of code, VIM is not a toy but a highly complex and advanced UNIX tool. Third, its user interface is based on ncurses, which requires a fairly complete terminal emulator. Consequently, conducting the development of Noux implied working with and understanding several components in parallel, including Noux itself, the libc, the Noux libc plugin, ncurses, VIM, and the terminal emulator. Our undertaking was successful. We are now able to run VIM on Genode without modifications and manual porting work.

The novelty of Noux compared to other OS-level virtualization approaches such as OpenVZ and FreeBSD jails lies in the degree of isolation it provides and the simplicity of its implementation. Noux instances are isolated from each other by microkernel mechanisms. Therefore the isolation between two instances does not depend on a relatively large kernel but on an extremely small trusted computing base of less than 35,000 lines of code. At the same time, the implementation turned out to be strikingly simple. Thanks to the existing APIs provided by the Genode framework, the Noux server is implemented in less than 2,000 lines of code. We are thrilled to learn that this small amount of code suffices to bring complex UNIX software such as VIM to life.

For more technical details about our work on this topic, please refer to the Sections Noux and Framebuffer-based virtual terminal and ncurses.

GDB debugging via application-level virtualization

The work described in the previous sections was motivated by the desire to re-use existing software on Genode. This section explores a creative use of sandboxing facilitated by the Genode architecture.

User-level debugging is a feature that we get asked about repeatedly. Until now, the answer was pretty long-winded because we use different debugging facilities on different kernels. However, none of those facilities is comparable to the convenient debugging tools we know from commodity OSes. For a long time, we were hesitant to build debugging support into Genode because we were afraid of subverting the security of Genode by adding special debug interfaces that short-circuit security policies. Furthermore, none of the microkernels we love so dearly featured support for user-level debugging. So we deferred the topic. Until now. With GDB monitor, we have found an approach to user-level debugging that is not only completely in line with Genode's architecture but capitalizes on it. Instead of adding special debugging interfaces to low-level components such as the kernel and core, we use an approach that we call application-level virtualization.

|

|

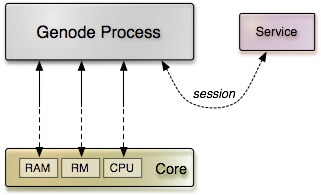

A Genode process uses low-level services provided by core as well as a higher-level service implemented as separate process component.

|

Each Genode process interacts with several low-level services provided by Genode's core, in particular the RAM service for allocating memory, the CPU service to create and run threads, and the RM service to manage the virtual address space of the process. However, the process does not contact core directly for those services but requests them via its chain of parents (e.g., the init process). This gives us the opportunity to route session requests for those services to alternative implementations.
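The routing of session requests along the chain of parents can be pictured with a small model. The sketch below is purely illustrative and does not use the Genode API: `Node` and `resolve` are hypothetical names, and the model ignores session arguments, labels, and services announced by sibling processes. It merely shows that a request travels up the tree until some ancestor implements the requested service, so an ancestor placed in between can take the place of core.

```cpp
#include <cassert>
#include <set>
#include <string>

// Hypothetical model of a node in the process tree (not the Genode API)
struct Node
{
    std::string           name;
    const Node           *parent;          // nullptr for core, the root of the tree
    std::set<std::string> local_services;  // services this node implements itself
};

// Return the name of the ancestor that ends up serving the client's session
// request, or an empty string if no ancestor offers the service
std::string resolve(const Node &client, const std::string &service)
{
    for (const Node *n = client.parent; n; n = n->parent)
        if (n->local_services.count(service))
            return n->name;
    return "";
}
```

In this model, a process started directly by init resolves a CPU session at core, whereas a process whose parent implements a CPU service of its own never reaches core with that request. Neither the process nor core has to be changed for that.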

|

|

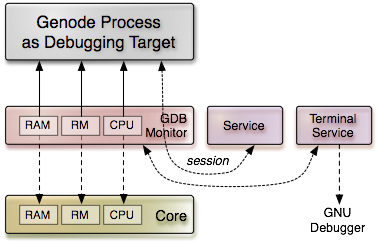

GDB monitor transparently intercepts the interaction of a Genode process with its environment. By virtualizing fundamental core services, GDB monitor exercises full control over the debugging target. GDB monitor, in turn, utilizes a separate service component to establish a terminal connection with a remote GDB.

|

When running a Genode process as debugging target, we place an intermediate component called GDB monitor between the process and its parent. Because GDB monitor is now the parent of the debugging target, all session requests including those for core services are issued at GDB monitor. Instead of routing those session requests further down the tree towards core, GDB monitor provides a local implementation of these specific services and can thereby observe and intercept all interactions between the process and core. In particular, GDB monitor gains access to all memory objects allocated from the debugging target's RAM session, it knows about the address-space layout, and it becomes aware of all threads created by the debugging target. Because GDB monitor possesses the actual capability to the debugging target's CPU session, it can exercise control over those threads, e.g., by pausing them or enabling single-stepping. By combining the information about the debugging target's address-space layout with the access to its memory objects, GDB monitor is even able to transparently change arbitrary memory in the debugging target, for example, by inserting breakpoint instructions into its text segment.
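Inserting a breakpoint by patching the text segment follows a pattern common to most debuggers: remember the original instruction byte, overwrite it with a trap instruction, and restore it when the breakpoint is removed. The following sketch illustrates the pattern on a plain byte buffer. It is not GDB monitor's code. `Text_segment` is a hypothetical stand-in for memory the monitor can access, and the opcode 0xcc (int3) applies to x86 only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <utility>
#include <vector>

// x86 'int3' opcode; other architectures use different trap instructions
constexpr std::uint8_t BREAKPOINT_OPCODE = 0xcc;

// Hypothetical stand-in for the target's text segment as seen by a monitor
class Text_segment
{
    std::vector<std::uint8_t>           _bytes;
    std::map<std::size_t, std::uint8_t> _saved;   // offset -> original byte

public:
    explicit Text_segment(std::vector<std::uint8_t> bytes)
    : _bytes(std::move(bytes)) { }

    // Patch a trap instruction in, remembering the byte it replaces
    bool insert_breakpoint(std::size_t offset)
    {
        if (offset >= _bytes.size() || _saved.count(offset))
            return false;
        _saved[offset] = _bytes[offset];
        _bytes[offset] = BREAKPOINT_OPCODE;
        return true;
    }

    // Restore the original byte so that execution can continue normally
    bool remove_breakpoint(std::size_t offset)
    {
        auto it = _saved.find(offset);
        if (it == _saved.end())
            return false;
        _bytes[offset] = it->second;
        _saved.erase(it);
        return true;
    }

    std::uint8_t byte_at(std::size_t offset) const { return _bytes.at(offset); }
};
```

The bookkeeping of saved bytes is what keeps the patching transparent: once the breakpoint is removed, the target's memory is identical to its original state.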

Thanks to application-level virtualization, the problem of user-level debugging, once deemed complicated, has become straightforward to address. The solution fits perfectly into the Genode concept, maintains its security, and does not require special-purpose debugging hooks in the foundation of the operating system.

To get a more complete picture about our work on GDB monitor, please read on at Section GDB monitor.

Base framework, low-level OS infrastructure

Handling CPU exceptions at user level

To support user-level policies of handling CPU exceptions such as division by zero or breakpoint instructions, we have added support for reflecting such exceptions to the CPU session interface. The owner of a CPU session capability can register a signal handler for each individual thread allocated from the session. This handler gets notified on the occurrence of an exception caused by the respective thread similar to how page faults are reflected to RM sessions. The CPU exception handling support has been implemented on the Fiasco.OC and OKL4 base platforms.

Remote access to thread state

Closely related to the new support for handling CPU exceptions, we have extended the information that can be gathered for threads of a CPU session to general-purpose register state and the type of the last exception caused by the thread. Furthermore, the CPU session interface has been extended to allow the pausing and resumption of threads as well as single-stepping through CPU instructions (on x86 and ARM).

All those additions are subject to capability-based security so that only the one who possesses both a CPU-session capability and a thread capability can access the thread state. This way, the extension of the CPU session interface opens up a lot of new possibilities (especially regarding debugging facilities) without affecting the security of the overall system.

Improved signaling latency

Over the last two years, we have observed a steadily growing number of use cases for Genode's signaling framework. Originally used only for low-frequency notifications, the API has become fundamental to important Genode mechanisms such as the data-flow protocol employed by the packet-stream interface, the on-demand fault handling performed by dataspace managers, and CPU exception handling. This observation provided us with the incentive to improve the latency of delivering signals by placing signal delivery into a dedicated thread within core.

Optimization for large memory-mapping sizes

Some kernels, in particular NOVA, are optimized to deal with flexible mapping sizes that are independent of physical page sizes. An internal representation of memory mappings that is independent of page sizes can greatly reduce the memory footprint needed to keep track of memory mappings, but only if large mappings are used. In practice, mapping sizes are constrained by the size of the mapped memory objects and their alignment in both the physical and the virtual address space. To facilitate the use of large mappings with NOVA, we have optimized core accordingly. Core tries to size-align memory objects in both physical memory and virtual memory (core-local as well as within normal user processes). This optimization is completely transparent to the Genode API but positively affects the page-fault overhead throughout the system, most noticeably the NPT-fault overhead of the Vancouver VMM.
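The effect of alignment on the achievable mapping size can be illustrated with a short calculation. The sketch below is not core's implementation. It assumes 4 KiB pages and naturally aligned power-of-two mappings, and `max_mapping_log2` is a hypothetical helper. It computes the largest mapping that may start at a given pair of virtual and physical addresses without exceeding the size of the memory object.

```cpp
#include <cassert>
#include <cstdint>

constexpr unsigned PAGE_SIZE_LOG2 = 12;   // assumption: 4 KiB pages

// Return the largest n such that a naturally aligned mapping of 2^n bytes
// can start at (virt, phys) and still fits into 'size' bytes. Both
// addresses are expected to be page-aligned.
unsigned max_mapping_log2(std::uint64_t virt, std::uint64_t phys,
                          std::uint64_t size)
{
    assert(size >= (1ULL << PAGE_SIZE_LOG2));

    unsigned log2 = PAGE_SIZE_LOG2;
    while (log2 + 1 < 64) {
        std::uint64_t next    = 1ULL << (log2 + 1);
        bool          aligned = ((virt | phys) & (next - 1)) == 0;

        // stop as soon as either address is misaligned or the object is too small
        if (!aligned || next > size)
            break;
        ++log2;
    }
    return log2;
}
```

A 4 MiB object placed at 4 MiB-aligned addresses in both address spaces can be mapped with a single 4 MiB mapping in this model. If the virtual address is shifted by just one page, the same object falls back to page-sized mappings at that address, which is why size-aligning memory objects on both sides pays off.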

Standard C++ library

To accommodate users of the standard C++ library, we used to host the stdcxx library in the libc repository. Apparently, there are use cases for stdcxx without Genode's C library, in particular for hybrid Linux/Genode programs that are linked against the host's glibc anyway. So we decided to move stdcxx to the base repository. If you need stdcxx but do not use Genode's libc, enabling the base repository in your build configuration suffices.

Terminal-session interface

We revised the terminal session interface by adding a simple startup-protocol for establishing terminal connections: At session-creation time, the terminal session may not be ready to use. For example, a TCP terminal session needs an established TCP connection first. However, we do not want the session-creation to be blocked on the server side because this would render the server's entry point unavailable for all other clients until the TCP connection is ready. Instead, we deliver a connected signal to the client emitted when the session becomes ready to use. The Terminal::Connection waits for this signal at construction time. Furthermore, we extended the terminal session interface with the new avail() and size() functions. The avail() function can be used to query for the availability of new characters. The size() function returns the size of the terminal (number of rows and columns).

Dynamic linker

The dynamic linker underwent several changes in its memory management. Since the original FreeBSD implementation heavily relies on UNIX-like mmap semantics, which up to this point was poorly emulated using Genode primitives, we abandoned this emulation completely. In the current setup the linker utilizes Genode's managed-dataspace concept in order to handle the loading of binaries and shared libraries and thus implements the required memory management correctly.

Also the linker has been adapted to Genode's new GCC 4.6.1 tool chain, which introduced new relocation types on the ARM platform.

Libraries and applications

C runtime

We have updated our FreeBSD-based C library to version 8.2.0 and thereby changed the way the libc is integrated with Genode. Instead of hosting the 3rd-party code as part of the Genode source tree in the form of the libc repository, the libc has now become part of the libports repository. This repository does not contain the actual 3rd-party source code but only the rules of how to download and integrate the code. These steps are fully automated via the libports/Makefile. Prior to using the libc, please make sure to prepare the libc package within the libports repository:

cd <genode-dir>/libports
make prepare PKG=libc

Vancouver virtual machine monitor

Vancouver is a virtual machine monitor specifically developed for use with the NOVA hypervisor. It virtualizes 32-bit x86 PC hardware including various peripherals.

The official project website is https://hypervisor.org. Vancouver relies on hardware virtualization support such as VMX (Intel) or SVM (AMD). With the current release, we have added the first version of our port of Vancouver to Genode to the ports repository. To download the Vancouver source code, simply issue the following command from within the ports repository:

make prepare PKG=vancouver

The glue code between Vancouver and Genode is located at ports/src/vancouver/. At the current stage, the port is not practically usable but it demonstrates well how the VMM code is meant to interact with Genode.

In contrast to the original Vancouver, which obtains its configuration from command-line arguments, the Genode version reads the virtual machine description as XML data supplied via Genode's config mechanism. For each component declared within the <machine> node in the config XML file, a new instance of a respective device model or host driver is instantiated. An example virtual machine configuration looks like this:

<machine>
  <mem start="0x0" end="0xa0000"/>
  <mem start="0x100000" end="0x2000000"/>
  <nullio io_base="0x80" />
  <pic io_base="0x20" elcr_base="0x4d0"/>
  <pic io_base="0xa0" irq="2" elcr_base="0x4d1"/>
  <pit io_base="0x40" irq="0"/>
  <scp io_port_a="0x92" io_port_b="0x61"/>
  <kbc io_base="0x60" irq_kbd="1" irq_aux="12"/>
  <keyb ps2_port="0" host_keyboard="0x10000"/>
  <mouse ps2_port="1" host_mouse="0x10001"/>
  <rtc io_base="0x70" irq="8"/>
  <serial io_base="0x3f8" irq="0x4" host_serial="0x4711"/>
  <hostsink host_dev="0x4712" buffer="80"/>
  <vga io_base="0x03c0"/>
  <vbios_disk/>
  <vbios_keyboard/>
  <vbios_mem/>
  <vbios_time/>
  <vbios_reset/>
  <vbios_multiboot/>
  <msi/>
  <ioapic/>
  <pcihostbridge bus_num="0" bus_count="0x10" io_base="0xcf8"
                 mem_base="0xe0000000"/>
  <pmtimer io_port="0x8000"/>
  <vcpu/> <halifax/> <vbios/> <lapic/>
</machine>
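One way to picture the step from config nodes to device-model instances is a registry that maps each node type to a creation function. The sketch below is a simplified model of that idea and does not reflect how the Vancouver port is actually structured. `Machine_node`, `Factory`, and `instantiate` are hypothetical names, the nodes are assumed to be parsed already, and each "instance" is represented by a descriptive string only.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <vector>

// One already-parsed child node of <machine>: tag name plus attributes
struct Machine_node
{
    std::string                        type;
    std::map<std::string, std::string> attributes;
};

// Hypothetical creation function: turns a node into a (here: textual) instance
using Factory = std::function<std::string (const Machine_node &)>;

// Create one instance per declared node, in the order of declaration.
// Node types without a registered factory are skipped in this sketch.
std::vector<std::string>
instantiate(const std::vector<Machine_node> &machine,
            const std::map<std::string, Factory> &registry)
{
    std::vector<std::string> instances;
    for (const Machine_node &node : machine) {
        auto it = registry.find(node.type);
        if (it == registry.end())
            continue;
        instances.push_back(it->second(node));
    }
    return instances;
}
```

Since one instance is created per node, declaring a node type twice, as done for the two pic nodes in the configuration above, yields two independent instances that differ only in their attributes.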

The virtual BIOS of Vancouver has built-in support for loading an OS from multiboot modules. On Genode, the list of boot modules is supplied with the <multiboot> node:

<multiboot>
  <rom name="bootstrap"/>
  <rom name="fiasco"/>
  <rom name="sigma0.foc"/>
  <rom name="core.foc"/>
</multiboot>

This configuration tells Vancouver to fetch the declared boot modules from Genode's ROM service.

For the current version, we decided to support SVM-based hardware only, for the simple reason that it is well supported by recent Qemu versions. At the time of the release, the SVM exit conditions CPUID, IOIO, MSR, NPT, INVALID, and STARTUP are handled. Of those conditions, the handling of NPT (nested page table) faults was the most challenging part because of the tight interplay between the NOVA hypervisor and the VMM at this point.

With this code in place, Vancouver is already able to boot unmodified L4ka::Pistachio or Fiasco.OC kernels. Both kernels print diagnostic output over the virtual comport and finally wait for the first timer interrupt.

Even though the current version has no practical use yet, it is a great starting point to dive deeper into the topic of faithful virtualization with Genode. For a quick example of running Vancouver, please refer to the run script at ports/run/vancouver.run.

We want to thank Bernhard Kauer and Udo Steinberg for having been extremely responsive to our questions, making the realization of this first version possible at a much shorter time frame than we expected.

TCP terminal

The new TCP terminal located at gems/src/server/tcp_terminal is a service that provides Genode's terminal-session interface via individual TCP connections. It supports multiple clients. The TCP port to be used for each client is defined as session policy in the config node of the TCP terminal server:

<config>
  <policy label="client" port="8181"/>
  <policy label="another_client" port="8282"/>
</config>

For an example of how to use the TCP terminal, please refer to the run script at gems/run/tcp_terminal.run.
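The label-based session policy shown in the configuration above boils down to a lookup from the session label of a client to a TCP port. The following sketch models that lookup. It is not the TCP terminal's implementation: `Policy` and `port_for_label` are hypothetical names, the policy list is assumed to be parsed already, and matching labels by exact string comparison is a simplifying assumption.

```cpp
#include <cassert>
#include <string>
#include <vector>

// One <policy> node of the configuration
struct Policy
{
    std::string label;
    unsigned    port;
};

// Return the TCP port configured for the given session label, or 0 if no
// policy matches (assumption: labels are compared for exact equality)
unsigned port_for_label(const std::vector<Policy> &policies,
                        const std::string &label)
{
    for (const Policy &policy : policies)
        if (policy.label == label)
            return policy.port;
    return 0;
}
```

Returning 0 for an unknown label lets the caller refuse the session, so that a client without a configured policy never gets a terminal.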

Framebuffer-based virtual terminal and ncurses

We significantly advanced the implementation of our custom terminal emulator to be found at gems/src/server/terminal. The new version supports all terminal capabilities needed to display and interact with ncurses-based applications (e.g., VIM) including support for function keys, colors, key repeat, and multiple keyboard layouts. The us-english keyboard layout is used by default. The German keyboard layout can be enabled using the <keyboard> config node:

<config>
  <keyboard layout="de"/>
</config>

As built-in font, the terminal uses the beautiful Terminus monospaced font.

Our port of the ncurses library that comes as libports package has become fully functional when combined with the terminal emulator described above. It is tuned to use the Linux terminal capabilities.

Noux

Noux is a runtime environment that imitates the behavior of a UNIX kernel but runs as plain Genode program. Our goal for this release was to be able to execute VIM with no source-code modifications within Noux.

In pursuit of this goal, we changed the I/O back end for the initial file descriptors of the Noux init process to use the bidirectional terminal-session interface rather than the unidirectional LOG session interface. Furthermore, as VIM is an interactive program, it relies on a working select call. So this system call had to be added to the Noux session interface. Because select is the first Noux system call that is able to block, support for blocking Noux processes had to be implemented. Furthermore, we improved the handling of environment variables supplied to the Noux init process, the handling of certain ioctl functions, and the handling of hard links in the TAR archives that Noux uses as file system.
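For readers unfamiliar with select, the sketch below shows how an interactive program typically uses the call to wait for input with a timeout. It uses the standard POSIX API as found on a UNIX host and says nothing about how Noux implements the call internally. The helper name `wait_readable` is an assumption made for illustration.

```cpp
#include <cassert>
#include <sys/select.h>
#include <sys/time.h>
#include <unistd.h>

// Block until 'fd' becomes readable or the timeout expires.
// Returns true if data can be read from 'fd' without blocking.
bool wait_readable(int fd, long timeout_ms)
{
    assert(fd >= 0 && fd < FD_SETSIZE);

    fd_set read_fds;
    FD_ZERO(&read_fds);
    FD_SET(fd, &read_fds);

    struct timeval timeout;
    timeout.tv_sec  = timeout_ms / 1000;
    timeout.tv_usec = (timeout_ms % 1000) * 1000;

    // select puts the caller to sleep until an event or the timeout occurs
    int ready = select(fd + 1, &read_fds, nullptr, nullptr, &timeout);

    return ready > 0 && FD_ISSET(fd, &read_fds);
}
```

An event loop calls such a function on its input descriptor and processes a key press only when the function returns true. The caller may be put to sleep for an unbounded time, which is the kind of behavior that made support for blocking processes necessary.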

We are happy to report that, thanks to Noux, we are now able to run VIM on Genode! If you would like to give it a spin, please try out the run script ports/run/noux_vim.run.

GDB monitor

Support of user-level debugging is a topic we get repeatedly asked about. It is particularly hard because the debugging facilities exposed by operating systems highly depend on the respective kernel whereas most open-source microkernels offer no user-level debugging support at all.

The current release will hopefully make the life of Genode application developers much easier. Our new GDB monitor component allows GDB to be connected to an individual Genode process as a remote target (via UART or TCP connection). Thereby, a wide range of convenient debugging features become available, for example

- The use of breakpoints,
- Single-stepping through assembly instructions or at function level,
- Source-level debugging,
- Printing of backtraces and call-frame inspection, and
- Observing different threads of a Genode process

Statically linked programs as well as programs that use shared libraries are supported, which we see as a significant improvement over the traditional debugging tools. Please note, however, that at the current stage, the feature is only supported on a small subset of Genode's base platforms (namely Fiasco.OC and partially OKL4). We will extend the support to other platforms depending on public demand.

The work on GDB monitor required us to approach the topic in a quite holistic manner, adding general support for user-level debugging to the Fiasco.OC kernel as well as the Genode base framework, componentizing the facility for bidirectional communication (terminal session), and creating the actual GDB support in the form of the GDB monitor component. To paint a complete picture of the scene, we have added the following documentation:

- User-level debugging on Genode: https://genode.org/documentation/developer-resources/gdb

L4Linux / L4Android

Update to kernel version 3.0

We have updated our modified version of the L4Linux kernel to support the latest L4Linux kernel version 3.0.0. Moreover, we fixed some issues with the kernel's internal memory management under ARM. Please don't forget to issue a make prepare in the ports-foc directory, before giving the new version a try.

Stub-driver support

With this release, we introduce a bunch of new stub-drivers in L4Linux. These stubs enable the use of Genode's NIC, terminal, and block services from Linux applications. To use a Genode session from Linux, it's sufficient to provide an appropriate service to the Linux instance. The stub drivers for these services simply try to connect to their service and register corresponding devices if such a service exists. For example, if a NIC session is provided to Linux, it will provide an ethernet device named eth0 to Linux applications. For terminal sessions, it will provide a serial device named ttyS0.

In contrast to the NIC and terminal stub drivers, the block driver supports the use of more than one block session. Therefore, you have to state the names of the individual block devices in the configuration. This way, it is possible to specify which block device in Linux gets routed to which block session. An example L4Linux configuration may look as follows:

<start name="l4linux">
  <resource name="RAM" quantum="512M"/>
  <config args="mem=128M console=ttyS0">
    <block label="sda"/>
    <block label="sdb"/>
  </config>
  <route>
    <service name="Block">
      <if-arg key="label" value="l4linux -> sda"/> <child name="rootfs"/>
    </service>
    <service name="Block">
      <if-arg key="label" value="l4linux -> sdb"/> <child name="home"/>
    </service>
    ...
  </route>
</start>

The configuration provides two block devices in Linux named sda and sdb, whereby the first one gets routed to a child of init named rootfs and the second to a child named home. Of course, both children have to provide the block session interface.

L4Android

Besides the support of L4Linux, Genode provides support for a slightly changed L4Linux variant called L4Android, which combines L4Linux with the Android kernel patches. To give Android on top of Genode a try, you need to prepare your ports-foc directory to contain the L4Android contrib code by issuing:

make prepare TARGET=l4android

After that, a run script can be used to build all necessary components, download the needed Android userland images, assemble everything, and start the demo via Qemu:

make run/l4android

Beforehand, the dde_ipxe and ports-foc repositories must be enabled in the etc/build.conf file in your build directory.

Although Android generally runs on top of Genode, the combined run-time overhead of full emulation (without KVM), paravirtualized Linux, and Java seems to make it almost unusable within Qemu. Therefore, we strongly advise you to use the Android version for x86 and turn on KVM support (at least partially). This can be configured in the etc/tools.conf file in your build directory by adding the following line:

QEMU_OPT = -enable-kvm

Unfortunately, it very much depends on your Qemu version and configuration, whether Fiasco.OC runs problem-free with KVM support enabled. If you encounter problems, try to use a limited KVM option with Qemu, like -no-kvm-irqchip, or -no-kvm-pit instead of -enable-kvm.

Device drivers

Device-driver environment for iPXE network drivers

Until now, Genode used network-card driver code from Linux 2.6 and gPXE. Unfortunately, the gPXE project appears to have been largely inactive since mid-2010, and the Linux DDE shows its age with our new tool chain. Therefore, we switched to the iPXE project, the official successor of gPXE, for our NIC drivers. Currently, drivers for Intel E1000/E1000e and PCnet32 cards are enabled in the new nic_drv. The Linux-based driver was removed.

- iPXE open source boot firmware: https://ipxe.org

PL110 display driver

The PL110 driver was reworked to support both the PL110 and PL111 controllers correctly. Moreover, it now runs not only within Qemu but on real hardware too. So far, it has been tested on ARM's Versatile Express evaluation board.

UART driver

The first version of the PL011 UART driver implemented the LOG interface to cover the primary use case of directing LOG output to different serial ports. However, with the addition of GDB support in the current release, the use of UARTs goes beyond unidirectional output. To let GDB monitor communicate with GDB over a serial line, bidirectional communication is needed. Luckily, there is already a Genode interface for this scenario, namely the terminal-session interface. Hence, we changed the PL011 UART driver to provide this interface type instead of LOG. In addition, we added a UART driver for the i8250 controller as found on PC hardware.

Both UART drivers can be configured via Genode's config mechanism to route each communication stream to a specific port depending on the session label of the client. The configuration concept is documented in os/src/drivers/uart/README. For a ready-to-use example of the UART drivers, please refer to the run script os/run/uart.run.

Platform support

NOVA Microhypervisor version 0.4

Genode closely follows the development of the NOVA hypervisor. With version 0.4, NOVA increasingly develops toward a full-featured and production-ready hypervisor. Therefore, little effort was needed to enable Genode 11.11 on the current NOVA kernel. The few changes include a new hypercall (SC control), capability-based assignment of GSIs (Global System Interrupts), and support for revoke filtering. Also, we improved Genode's page-mapping behavior to take advantage of large flexpage sizes.

Fiasco.OC microkernel

Update to revision 38

With the current release, we switched to the latest available Fiasco.OC kernel version (revision 38). After having experienced timing issues with Fiasco.OC on different ARM platforms in the past, we applied a small patch to the current version that corrects the timing behavior of the kernel. The patch is applied automatically when executing make prepare in the base-foc repository.

Querying and manipulating remote threads

To support the use of GDB on top of Genode for Fiasco.OC, several enhancements were developed. First, the thread state accessible via the CPU-session interface has been extended to reflect the whole register set of the corresponding thread, depending on the underlying hardware platform. Moreover, you can now stop and resume a thread's execution. On the x86 platform, it has become possible to enable single-stepping mode for a specific thread. All exceptions a thread raises are now reflected to the exception handler of this thread. The exception handler can be set via the CPU-session interface. To support stop/resume, single stepping, and breakpoints on ARM, we had to modify the Fiasco.OC kernel slightly. All patches to the Fiasco.OC kernel can be found in base-foc/patches and are automatically applied when issuing 'make prepare'.

Versatile Express Cortex-A9x4

With the current release, we introduce support for a new platform on top of Fiasco.OC. The ARM Versatile Express evaluation board with Coretile Cortex A9x4 now successfully executes the well-known Genode demo (have a look at the os/run/demo.run run script). Unfortunately, this platform is not supported by most Qemu versions shipped with Linux distributions. Furthermore, our tests with a Qemu variant that supports Versatile Express and the Fiasco.OC kernel were not successful. Nevertheless, on a real machine, the demo works nicely. Other drivers such as PL111 and the mouse driver have not been tested yet.

Linux

New IPC implementation based on UNIX domain sockets

On Linux, Genode processes used to communicate via UDP sockets. So, any IPC message could be addressed individually to a specific receiver without establishing a connection beforehand. However, Genode was never designed to work transparently over the network, and UDP seems overkill for our requirements. With this release, we addressed this mismatch by adapting the IPC framework to use UNIX domain sockets.

The design uses two sockets per thread: a client socket and a server socket. The server socket is used to receive requests from any client on Ipc_server::_wait and is accessible as ep-<thread id>-server, just as the former UDP port. After processing the request, the server replies to the requesting client and returns to the wait state. On Ipc_client::_call, the client socket is used to send the request and receive the response.

This sounds trivial but marks an important step away from the design based on IPC istream and ostream toward the explicit handling of RPC client and server roles. Linux (as well as other platforms) will benefit from this in planned future changes.
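The request/reply pattern described above can be sketched with plain POSIX calls. The following is only an illustration, not Genode's actual IPC code: the function names, the use of datagram sockets, and the /tmp/ep-demo-<pid>-* socket paths are assumptions made for this example. Both roles run in one thread, which works because a datagram sent to a bound UNIX domain socket is queued until the receiver picks it up.

```cpp
#include <cassert>
#include <cstdio>
#include <string>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/un.h>
#include <unistd.h>

// Hypothetical helper: create a datagram UNIX domain socket bound to 'path'.
static int bound_socket(const std::string &path, sockaddr_un &addr)
{
    int fd = socket(AF_UNIX, SOCK_DGRAM, 0);
    if (fd < 0)
        return -1;

    addr = sockaddr_un();
    addr.sun_family = AF_UNIX;
    std::snprintf(addr.sun_path, sizeof(addr.sun_path), "%s", path.c_str());
    unlink(addr.sun_path); // remove a stale socket file of an earlier run

    if (bind(fd, reinterpret_cast<sockaddr *>(&addr), sizeof(addr)) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}

// Perform one request/reply round trip and return the reply as seen by the
// client (empty string on error). The socket names are illustrative only.
std::string rpc_round_trip(const std::string &request)
{
    const std::string base = "/tmp/ep-demo-" + std::to_string(getpid());
    sockaddr_un server_addr, client_addr;
    int server = bound_socket(base + "-server", server_addr);
    int client = bound_socket(base + "-client", client_addr);

    std::string result;
    char buf[256];

    // Client role ("call"): send the request to the server socket.
    if (server >= 0 && client >= 0
     && sendto(client, request.data(), request.size(), 0,
               reinterpret_cast<sockaddr *>(&server_addr),
               sizeof(server_addr)) >= 0) {

        // Server role ("wait"): receive a request from any client and
        // remember the sender's address for the reply.
        sockaddr_un from = sockaddr_un();
        socklen_t from_len = sizeof(from);
        ssize_t n = recvfrom(server, buf, sizeof(buf), 0,
                             reinterpret_cast<sockaddr *>(&from), &from_len);
        if (n >= 0) {
            // Server role ("reply"): answer the requesting client only.
            std::string reply = "reply to: " + std::string(buf, n);
            if (sendto(server, reply.data(), reply.size(), 0,
                       reinterpret_cast<sockaddr *>(&from), from_len) >= 0) {
                // Client role: receive the response on the client socket.
                n = recv(client, buf, sizeof(buf), 0);
                if (n >= 0)
                    result.assign(buf, n);
            }
        }
    }

    if (server >= 0) { close(server); unlink(server_addr.sun_path); }
    if (client >= 0) { close(client); unlink(client_addr.sun_path); }
    return result;
}
```

Calling rpc_round_trip("ping") performs one complete exchange. In a real system, the server and client roles would of course live in different threads or processes.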

During the design, we also addressed two other issues:

Child processes in Linux inherit open file descriptors from their parents. Therefore, children further down the Genode tree formerly had plenty of open files. The current implementation uses the close-on-exec flag to advise the Linux kernel to close the marked file descriptors before executing the new program on execve().

Genode resources appeared in the file system just under /tmp, e.g., dataspaces as ds-<user id>-<count>. This always filled the global temp directory with dozens of Genode-specific files. Now, Genode resources are located in /tmp/genode-<user id>.
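As a rough illustration of the close-on-exec mechanism mentioned above, the following sketch marks one end of a pipe with the FD_CLOEXEC flag using the POSIX fcntl interface. The helper names are hypothetical and the code is not taken from Genode. It merely shows how a descriptor can be flagged so that the kernel closes it on execve().

```cpp
#include <cassert>
#include <fcntl.h>
#include <unistd.h>

// Mark 'fd' as close-on-exec while preserving its other descriptor flags.
bool set_close_on_exec(int fd)
{
    int flags = fcntl(fd, F_GETFD);
    if (flags < 0)
        return false;
    return fcntl(fd, F_SETFD, flags | FD_CLOEXEC) == 0;
}

// Return true if 'fd' is valid and carries the close-on-exec flag.
bool is_close_on_exec(int fd)
{
    int flags = fcntl(fd, F_GETFD);
    return flags >= 0 && (flags & FD_CLOEXEC) != 0;
}

// Demo: flag the read end of a pipe and leave the write end untouched.
// Returns true if the flags look as expected before and after.
bool demo_close_on_exec()
{
    int fds[2];
    if (pipe(fds) != 0)
        return false;

    bool before = is_close_on_exec(fds[0]);  // plain pipe(): flag not set
    bool ok     = set_close_on_exec(fds[0]);
    bool after  = is_close_on_exec(fds[0]);  // flag is now set
    bool other  = is_close_on_exec(fds[1]);  // write end is unaffected

    close(fds[0]);
    close(fds[1]);
    return !before && ok && after && !other;
}
```

A descriptor flagged this way stays usable in the current process and in forked children until they call execve(), at which point the kernel closes it.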

Support for manually managing local sub address spaces

On microkernel-based platforms, Genode provides a powerful mechanism to manage parts of a process' address space remotely (from another process called dataspace manager) or locally. In contrast to those platforms, however, the concept of managed dataspaces is not available on Linux because this kernel lacks a mechanism to remotely manipulate address spaces. Naturally, in the world of monolithic kernels, it is the task of the kernel to manage the virtual address spaces of user-level programs.

The uses of managed dataspaces roughly fall into two categories: the provision of virtual memory objects (dataspaces) in an on-demand-paged fashion and the manual management of a part of the local address space. An example for the latter is the manual management of the thread context area, which must be kept clean of arbitrary dataspace mappings. Another use case is the manual placement of shared-library segments within the local address space. Traditionally, we had to address those problems with special cases on Linux. To support these use cases in a generic way, we enhanced the memory management support for the Linux base platform to support so-called sub-RM sessions in the local address space. A sub RM session is the special case of a managed dataspace that is used only local to the process and populated eagerly (not on-demand paged). With local sub-RM sessions, a large part of the virtual memory of the process can be reserved for attaching dataspaces at manually defined offsets.

The new example base/src/test/sub_rm illustrates the use of sub RM sessions. The example works across all platforms. It can be executed using the run script base/run/sub_rm.run.
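To convey the idea of reserving a part of the local address space and attaching memory at manually defined offsets, the following sketch uses plain Linux mmap calls. It is an analogy only and does not use the Genode API: the class Local_region and its methods are hypothetical, and anonymous memory stands in for dataspaces. Whether Genode's Linux backend works this way internally is not claimed here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <sys/mman.h>
#include <unistd.h>

// Hypothetical stand-in for a locally managed sub address space.
class Local_region
{
    char  *_base = nullptr;
    size_t _size;
    size_t _page;

public:
    // Reserve 'size' bytes of virtual address space without backing memory.
    explicit Local_region(size_t size)
    : _size(size), _page(static_cast<size_t>(sysconf(_SC_PAGESIZE)))
    {
        void *p = mmap(nullptr, size, PROT_NONE,
                       MAP_PRIVATE | MAP_ANONYMOUS | MAP_NORESERVE, -1, 0);
        if (p != MAP_FAILED)
            _base = static_cast<char *>(p);
    }

    ~Local_region() { if (_base) munmap(_base, _size); }

    Local_region(const Local_region &) = delete;
    Local_region &operator=(const Local_region &) = delete;

    bool  valid() const { return _base != nullptr; }
    char *base()  const { return _base; }

    // Eagerly populate [offset, offset + len) of the reserved area.
    // Returns the local address or nullptr on error.
    char *attach_at(size_t offset, size_t len)
    {
        if (!_base || offset % _page != 0 || offset > _size
         || len == 0 || len > _size - offset)
            return nullptr;

        void *p = mmap(_base + offset, len, PROT_READ | PROT_WRITE,
                       MAP_PRIVATE | MAP_ANONYMOUS | MAP_FIXED, -1, 0);
        return p == MAP_FAILED ? nullptr : static_cast<char *>(p);
    }
};

// Demo: reserve 1024 pages and attach two chunks at chosen offsets.
bool demo_sub_region()
{
    const size_t page = static_cast<size_t>(sysconf(_SC_PAGESIZE));
    Local_region region(1024 * page);
    if (!region.valid())
        return false;

    char *a = region.attach_at(4 * page, page);
    char *b = region.attach_at(64 * page, 2 * page);
    if (!a || !b)
        return false;

    std::strcpy(a, "first");
    std::strcpy(b, "second");

    return a == region.base() + 4 * page
        && b == region.base() + 64 * page
        && std::strcmp(a, "first") == 0
        && std::strcmp(b, "second") == 0;
}
```

Because the whole range is reserved up front, no unrelated mapping can end up inside it, which mirrors the requirement of keeping an area such as the thread context area clean of arbitrary mappings.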

Improved handling of hybrid Linux/Genode programs

Hybrid Linux/Genode programs are a special breed of programs that use both the Genode API and Linux libraries found on the host Linux system. Examples of such programs are the libSDL-based framebuffer driver, the ALSA-based audio driver, and the tun/tap-based NIC driver. Generally speaking, these are components that interface Genode with the Linux environment. Because we expected this kind of program to be rare, not much attention was paid to making the creation of this class of programs convenient. Consequently, the target-description files for them were rather clumsy. However, we discovered that Genode can be used as a component framework on Linux. In such a scenario, hybrid Linux/Genode programs are the normal case rather than an exception, which prompted us to reconsider the role of hybrid Linux/Genode programs within Genode. The changes are:

- The new lx_hybrid library takes care of the peculiarities of the linking stage of a hybrid program. In contrast to a plain Genode component, such a program is linked with the host's linker script and uses the host's dynamic linker.

- To support the common case of linking host libraries to a hybrid program, we introduced the new LX_LIBS variable. For each library listed in LX_LIBS, the build system queries the library's linking requirements using pkg-config.

- The startup procedure differs between normal Genode programs and hybrid programs. Plain Genode programs begin their execution with Genode's startup code, which performs the initialization of the address space, executes all static global constructors, and calls the main function. In contrast, hybrid programs start their execution with the startup code of the host's libc, leaving the Genode-specific initializations unexecuted. To make sure that these initializations are performed prior to any application code, the lx_hybrid library contains a highly prioritized constructor called lx_hybrid_init that performs those initializations as a side effect.
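The notion of a highly prioritized constructor can be illustrated with the constructor attribute supported by GCC and Clang. The sketch below is not the lx_hybrid code: the names early_init, Global, and startup_order are made up for this example. It records the order in which a prioritized constructor function and an ordinary static global constructor run.

```cpp
#include <cassert>
#include <cstring>

// Zero-initialized storage that needs no dynamic initialization, so it is
// safe to use from any constructor regardless of its priority.
static char startup_log[8];
static int  startup_pos;

static void record(char c)
{
    if (startup_pos < 7)
        startup_log[startup_pos++] = c;
}

// Constructor function with priority 101, the highest priority available
// to applications (lower numbers run first, 0 to 100 are reserved).
__attribute__((constructor(101)))
static void early_init()
{
    record('E');
}

// Ordinary static global object. Its constructor runs with the default
// (lowest) priority, hence after early_init().
struct Global
{
    Global() { record('G'); }
};

static Global global_object;

// Returns the recorded order, one letter per executed constructor.
const char *startup_order()
{
    return startup_log;
}
```

When startup_order() is called from main, both constructors have already run, with the prioritized function first. This is the property that allows platform-specific initialization to precede application-level static constructors.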

L4ka::Pistachio microkernel

The Pistachio kernel recently moved from Mercurial to Git (hosted on GitHub) as its version control system. We have updated the configuration and build process of the kernel and user-land tools within Genode accordingly.

Genode's 11.11 tool chain also required an adaptation of the system-call bindings on x86. This was caused by a change in how GCC handles input constraints of inline assembler. GCC now passes memory constraints using stack-relative addressing, which can lead to serious problems when manipulating the stack pointer within the assembly code.

Build system and tools

New tool chain based on GCC 4.6.1

Early on in the genesis of Genode, we introduced a custom tool chain to overcome several problems inherent to the use of standard tool chains installed on Linux host platforms.

First, GCC and binutils versions vary a lot between different Linux systems. Testing the Genode code with all those different tool chains and constantly adapting the code to the peculiarities of certain tool-chain versions is infeasible and annoying. Second, Linux tool chains use certain features that stand in the way when building low-level system components. For example, the -fstack-protector option is enabled by default on some Linux distributions. Hence, we have to turn it off when building Genode. However, some tool chains lack this option, so the attempt to turn it off produces an error. The most important problem with Linux tool chains is the dependency of their respective GCC support libraries on the glibc. When not using a Linux glibc, as is the case with Genode, this leads to manifold problems, most of them subtle and extremely hard to debug. For example, the support libraries expect the Linux way of implementing thread-local storage (using segment registers on x86_32). This code will simply crash on other kernels. Another example is the use of certain C-library functions, which are not available on Genode. Hence, Genode provides custom implementations of those functions (in the cxx library). Unfortunately, the set of functions used varies across tool-chain versions. For these reasons, we introduced a custom-configured tool chain where we mitigated those problems by pinning the tools to certain versions and tweaking the compiler configuration to our needs (e.g., preventing the use of Linux TLS).

That said, the use of our custom-configured tool chain was not free from problems either. In particular, the script for creating the tool chain relied on a libc being present on the host system. The header files of the libc would be used to build the GCC support libraries. This introduced two problems. First, when adding Genode's libc to the picture, which is based on FreeBSD's C library, the expectations of the GCC support libraries did not match 100% with the semantics implemented by Genode's libc (e.g., the handling of errno differs). Second, the tool chain could only be built for the platform that corresponds to the host. For example, on a Linux-x86_32 system, it was not possible to build an x86_64 or ARM tool chain. For this reason, we used the ARM tool chains provided by CodeSourcery.

With Genode 11.11, we addressed the root of the tool-chain problem by completely decoupling the Genode tool chain from the host system that is used to build it. The most important step was the removal of GCC's dependency on a C library, which is normally needed to build the GCC support libraries. We were able to remove the libc dependency by sneaking a small custom libc stub into the GCC build process. This stub comes in the form of the single header file tool/libgcc_libc_stub.h and brings along all type definitions and function declarations expected by the support-library code. Furthermore, we removed all GNU-specific heuristics from the tool chain. Technically, the Genode tool chain is a bare-metal tool chain. But in contrast to existing bare-metal tool chains, C++ is fully supported.

With the libc dependency out of the way, we are now free to build the tool chain for arbitrary architectures, which brings us two immediate benefits. First, we no longer have to rely on the CodeSourcery tool chain for ARM. There is now a genode-arm tool chain using the same compiler configuration as used on x86. The second benefit is the use of multiarch libs on the x86 platform. The genode-x86 tool chain can be used for both x86_32 and x86_64, the latter being the default.

The new tool-chain script is located at tool/tool_chain. It takes care of downloading all components needed and building the tool chain. Currently, the tool chain supports x86 and ARM. Note that the tool_chain script can be executed in an arbitrary directory. For example, to build the Genode tool chain for x86 within your /tmp/ directory, use the following commands:

mkdir /tmp/tool_chain
cd /tmp/tool_chain
<genode-dir>/tool/tool_chain x86

After completing the build, you will be asked for your user password to enable the installation of the result to /usr/local/genode-gcc/.

Since we introduced GDB support into Genode, we added GDB in addition to GCC and binutils to the Genode tool chain. The version is supposed to match the one expected by Genode's GDB facility, avoiding potential problems with mismatching protocols between GDB monitor and GDB.

Optimization of the library-dependency build stage

The Genode build process consists of two stages. The first stage determines inter-library dependencies using non-parallel recursive make. It traverses all targets to be built and the libraries those targets depend on, and stores the dependency information between libraries and targets in the file <build-dir>/var/libdeps. This generated file contains make rules to be executed in the second build stage, which performs all compilation and linking steps. In contrast to the first stage, the work-intensive second stage can be executed in parallel using the -j argument of make. Because of the serialization of the first build stage, it naturally does not profit from multiple CPUs. This should be no problem because the task of the first stage is simple and supposedly executed quickly. However, for large builds including many targets, we found the time needed for the first stage to become longer than expected. This prompted us to do a bit of profiling.

Tracing the execve syscalls of the first build stage via strace revealed that the echo commands used for creating the var/libdeps file contributed significantly to the overall time because each echo involves the spawning of a shell. We applied two countermeasures. First, we eagerly detect already visited libraries and do not visit them again (originally, this detection was performed later, when already revisiting the library). This change improves the performance by circa 10%. Second and more importantly, the subsequent echo commands are now batched into one command featuring multiple echoes. This way, only one shell is started for executing the sequence. This change leads to a performance improvement of about 300%-500%, depending on the size of the build. Lesson learned: Using make rules as shell scripts is sometimes a bad idea.

Improved libports and ports package handling

We also enhanced the package cleanup rules in libports. It is now possible to clean up contribution code on a per-package basis, analogous to the preparation of packages. If you don't want to tidy up the whole libports repository but, for instance, just the LwIP code, issue:

make clean PKG=lwip